A chatbot can analyze vast quantities of data from previous conversations to identify patterns and trends, which helps it deliver personalized experiences to each customer. Sentiment analysis performs narrative mapping in real time, which helps chatbots understand particular words or sentences. Words like "a" and "the" appear often but carry little meaning on their own, so they are commonly removed as stop words. Stemming and lemmatization are provided by libraries such as NLTK, and spaCy provides lemmatization. For example, "university," "universities," and "university’s" might all be stemmed to the base univers. Sentence segmentation looks straightforward in languages like English, where the end of a sentence is marked by a period, but it is still not trivial. The process becomes even more complex in languages, such as ancient Chinese, that don’t have a delimiter marking the end of a sentence. Then we might imagine that, starting from any point on the plane, we would always want to end up at the closest dot (i.e., we would always go to the closest coffee shop).
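
A minimal sketch of the preprocessing steps described above. The stop-word list and suffix rules are hypothetical and deliberately tiny: they are chosen only so that the "university" example reduces to univers, and they are not the Porter stemmer or any library's implementation. The sentence splitter is equally naive, which is the point: it breaks on an abbreviation, showing why segmentation is not trivial even in English.

```python
import re

# Hypothetical, deliberately tiny stop-word list (real lists are much longer).
STOP_WORDS = {"a", "an", "and", "of", "the", "to"}

# Hypothetical heuristic rules: strip a possessive first, then at most one suffix.
POSSESSIVES = ("'s", "\u2019s")  # straight and curly apostrophes
SUFFIXES = ("ities", "ity", "s")  # tried in order, longest first


def split_sentences(text: str) -> list[str]:
    """Naively split after '.', '!' or '?' when followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def remove_stop_words(tokens: list[str]) -> list[str]:
    """Drop high-frequency words that carry little meaning on their own."""
    return [t for t in tokens if t.lower() not in STOP_WORDS]


def stem(word: str) -> str:
    """Reduce a word to a crude base form using the heuristic rules above."""
    word = word.lower()
    for p in POSSESSIVES:
        if word.endswith(p):
            word = word[: -len(p)]
            break
    for suffix in SUFFIXES:
        # Keep at least three characters and leave words like "class" alone.
        if word.endswith(suffix) and not word.endswith("ss") and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word
```

Here `stem("university")`, `stem("universities")` and `stem("university’s")` all return `"univers"`, while `split_sentences("Dr. Smith arrived. He ordered coffee.")` wrongly treats `"Dr."` as a sentence of its own. Libraries such as NLTK ship tested stemmers and tokenizers; a hand-rolled version like this is only useful for seeing how the heuristics behave.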

Various methods may be used in this data preprocessing. Stemming and lemmatization reduce words to their base forms; stemming does so informally, using heuristic rules. Tokenization splits text into individual words and word fragments. Word2Vec, introduced in 2013, uses a vanilla neural network to learn high-dimensional word embeddings from raw text. Newer feature-extraction methods include Word2Vec, GloVe, and learning the features during the training process of a neural network. DynamicNLP leverages intent names (at least 3 words) and one training sentence to create training data in the form of generated possible user utterances. Transformers are mainly used in natural language processing, where input sequences vary in length and, unlike images, cannot easily be resized. Deep-learning models take a word embedding as input and, at each time step, return a probability distribution over the next word, that is, a probability for each word in the dictionary. After this detailed discussion of the two approaches, it’s time for a quick summary. Over the past couple of years, since we began working in chatbot application development, we’ve built a wide variety of chatbot applications for a number of businesses.
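
To make the point about next-word probabilities concrete, here is a sketch of how raw scores (logits) for each word in a vocabulary are turned into a probability distribution with the softmax function. The vocabulary and scores are hypothetical placeholders for a trained model's output at a single time step; no actual model is involved.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1.

    Subtracting the maximum first avoids overflow in math.exp without
    changing the result.
    """
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_word_distribution(vocab: list[str], logits: list[float]) -> dict[str, float]:
    """Pair every vocabulary word with its probability of coming next."""
    if len(vocab) != len(logits):
        raise ValueError("expected exactly one score per vocabulary word")
    return dict(zip(vocab, softmax(logits)))


# Hypothetical vocabulary and scores standing in for a model's output at one time step.
vocab = ["refund", "order", "help", "the"]
logits = [2.0, 1.0, 0.5, -1.0]
dist = next_word_distribution(vocab, logits)
prediction = max(dist, key=dist.get)  # greedy choice: the most probable next word
```

Taking the single most probable word (greedy decoding) is the simplest choice; sampling from `dist` instead is another common option when more varied replies are wanted.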

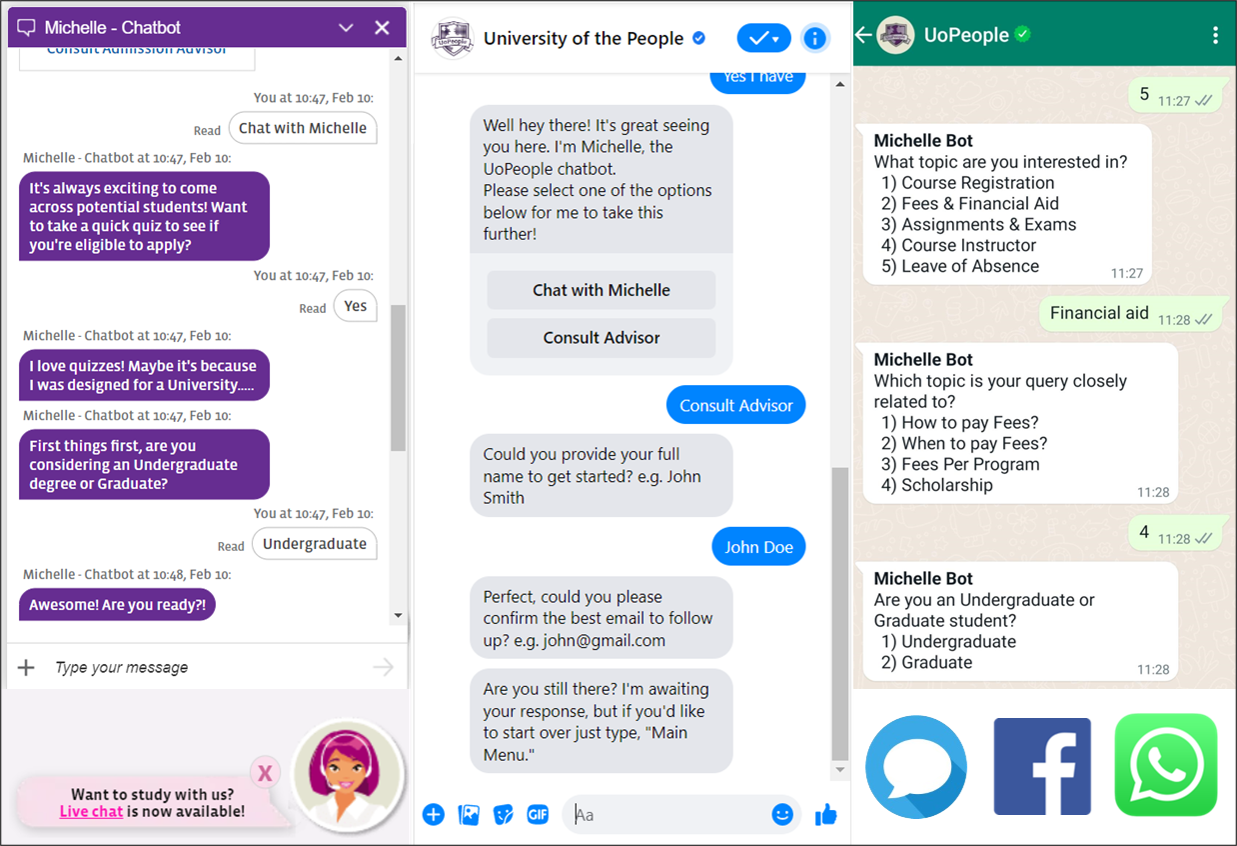

In today’s digital era, where communication and automation play an important role, chatbots have emerged as powerful tools for businesses and individuals alike. Chatbots are generally designed to handle simple and repetitive tasks; AI chatbots, however, excel at multitasking and can handle a large number of queries simultaneously. Bag-of-Words counts the number of times each word or n-gram (combination of n words) appears in a document. Tokenization typically produces a word index and tokenized text in which words are represented as numerical tokens for use in various deep learning methods. NLP architectures use various methods for data preprocessing, feature extraction, and modeling. It’s useful to think of these methods in two categories: traditional machine learning methods and deep learning methods. To evaluate a word’s significance, we consider two things. The first is term frequency: how important is the word within the document? Word2Vec comes in two variants: Skip-Gram, in which we try to predict the surrounding words given a target word, and Continuous Bag-of-Words (CBOW), which tries to predict the target word from the surrounding words. While live agents often try to connect with customers and personalize their interactions, NLP in customer service enables chatbots and voice bots to do the same.
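
The sketch below shows, on toy text, Bag-of-Words counting over n-grams, a word index that encodes words as integer tokens, and the training examples that Skip-Gram and CBOW would derive from the same tokens. Whitespace tokenization, reserving index 0 for padding, and a context window of 1 are simplifying assumptions; real Word2Vec training adds a neural network on top of these pairs.

```python
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace (real tokenizers also handle punctuation)."""
    return text.lower().split()


def ngrams(tokens: list[str], n: int) -> list[str]:
    """All contiguous runs of n tokens, joined with spaces."""
    return [" ".join(tokens[i : i + n]) for i in range(len(tokens) - n + 1)]


def bag_of_words(text: str, n: int = 1) -> Counter:
    """Count how often each n-gram appears in the text."""
    return Counter(ngrams(tokenize(text), n))


def build_word_index(texts: list[str]) -> dict[str, int]:
    """Give each new word the next integer ID; 0 is left free for padding (an assumption)."""
    index: dict[str, int] = {}
    for text in texts:
        for token in tokenize(text):
            if token not in index:
                index[token] = len(index) + 1
    return index


def encode(text: str, index: dict[str, int]) -> list[int]:
    """Map words to their numerical tokens, skipping out-of-vocabulary words."""
    return [index[t] for t in tokenize(text) if t in index]


def window(tokens: list[str], i: int, size: int) -> list[str]:
    """Words within `size` positions of position i, excluding position i itself."""
    lo, hi = max(0, i - size), min(len(tokens), i + size + 1)
    return [tokens[j] for j in range(lo, hi) if j != i]


def skip_gram_pairs(tokens: list[str], size: int = 1) -> list[tuple[str, str]]:
    """Skip-Gram: (target word, one surrounding word) pairs."""
    return [(t, c) for i, t in enumerate(tokens) for c in window(tokens, i, size)]


def cbow_examples(tokens: list[str], size: int = 1) -> list[tuple[list[str], str]]:
    """CBOW: (surrounding words, target word) examples."""
    return [(window(tokens, i, size), t) for i, t in enumerate(tokens)]
```

For instance, `bag_of_words("the bot helps the customer")["the"]` is 2, and for the tokens `["a", "b", "c"]` Skip-Gram yields `("a", "b"), ("b", "a"), ("b", "c"), ("c", "b")`, while CBOW yields one example per target word with its neighbours as the input.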

Not only are AI and NLU being used in chatbots that allow for better interactions with customers, but they are also being used in AI-powered agent assistants that help representatives do their jobs better and more efficiently. Techniques such as named entity recognition and intent classification are commonly used in NLU. For named entity recognition, for instance, hidden Markov models can be used along with n-grams. Decision trees are a class of supervised classification models that split the dataset based on different features to maximize information gain in those splits. NLP models work by finding relationships between the constituent parts of language, for example, the letters, words, and sentences found in a text dataset. Feature extraction: most conventional machine-learning techniques work on features (generally numbers that describe a document in relation to the corpus that contains it) created by Bag-of-Words, TF-IDF, or generic feature engineering such as document length, word polarity, and metadata (for instance, whether the text has associated tags or scores). The second factor in a word’s significance is inverse document frequency: how important is the term across the whole corpus? Term frequency alone rewards words such as "the" that are common everywhere; inverse document frequency corrects for this, being high if the word is rare and low if the word is common across the corpus.
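
Combining term frequency and inverse document frequency gives TF-IDF, and similar counting underlies the information gain a decision tree maximizes when it chooses a split. The sketch below uses the plain definitions tf = count / document length, idf = log(N / df), and base-2 entropy; libraries commonly apply smoothing or normalization variants, so their exact values will differ. The toy corpus, the labels, and the "mentions a refund" feature are hypothetical.

```python
import math
from collections import Counter


def tf(term: str, doc: list[str]) -> float:
    """Term frequency: share of the document's tokens equal to `term`."""
    return Counter(doc)[term] / len(doc)


def idf(term: str, corpus: list[list[str]]) -> float:
    """Inverse document frequency: high for rare terms, 0 for terms in every document."""
    df = sum(1 for doc in corpus if term in doc)
    return math.log(len(corpus) / df) if df else 0.0


def tf_idf(term: str, doc: list[str], corpus: list[list[str]]) -> float:
    return tf(term, doc) * idf(term, corpus)


def entropy(labels: list[str]) -> float:
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())


def information_gain(labels: list[str], feature: list[bool]) -> float:
    """Entropy before the split minus the size-weighted entropy after it."""
    groups: dict[bool, list[str]] = {}
    for value, label in zip(feature, labels):
        groups.setdefault(value, []).append(label)
    after = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - after


# Hypothetical toy corpus of tokenized customer messages.
corpus = [
    ["the", "bot", "answers"],
    ["the", "agent", "answers"],
    ["the", "bot", "escalates"],
]
# Hypothetical labels and a binary feature ("mentions a refund") for four messages.
labels = ["complaint", "complaint", "question", "question"]
mentions_refund = [True, True, False, False]
```

Because "the" appears in every document, its IDF is log(3/3) = 0, so its TF-IDF score is 0 however often it occurs, while "escalates" (one document) outscores "bot" (two documents). Likewise, splitting on `mentions_refund` separates the two classes perfectly, giving an information gain of 1 bit, whereas a feature that leaves both classes mixed in each branch gains nothing.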